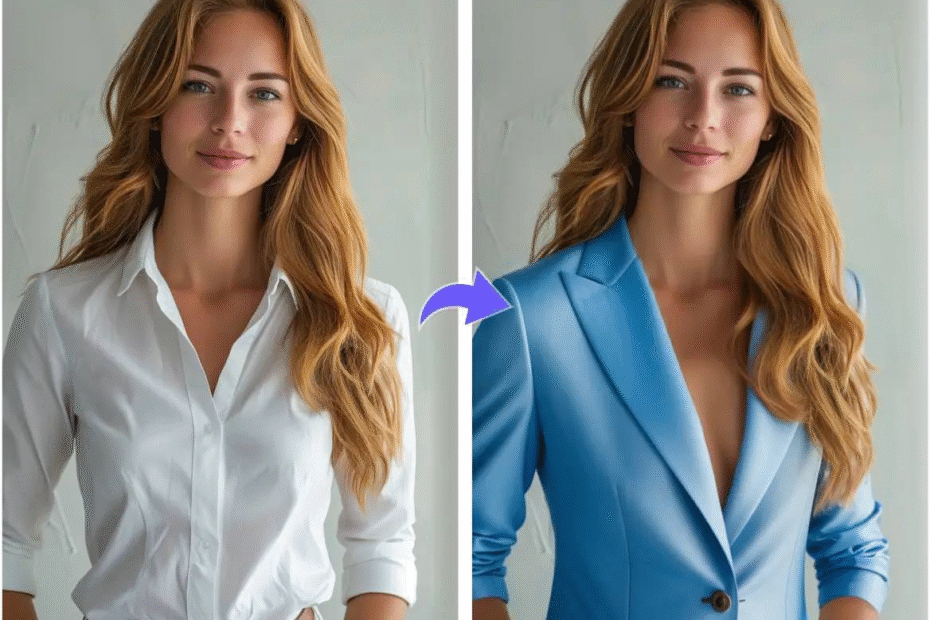

Not long ago, editing a photo to enhance or alter someone’s appearance took a competent professional, or at least a copy of Photoshop and a few spare hours. Today, a single click is more than sufficient.

An AI program does the work, and experience becomes irrelevant. Upload a picture and the AI does everything for you. That is the genuinely frightening part, and we are already there.

One of the most talked-about and most disputed AI tools is the Free Undress AI Remover Tool. At first glance it looks like just another app filter, but a closer look is very troubling.

What Precisely is the Free Undress AI Remover Tool?

This program goes beyond basic functions such as background removal or color adjustment. It is aimed specifically at photographs of clothed individuals, most often women. Its function is to digitally strip the garments from the picture. The end product is a falsified image in which the person appears to be unclothed when, in reality, they never posed that way.

The “undressing” is, of course, an image generated by AI, and the result can be startlingly realistic. Some of these tools are websites, some are private Telegram bots, and others are downloadable apps. They present themselves as being “for fun” or “for entertainment,” but we all know what they are really used for.

How Does It Actually Work?

Here is a simplified version.

These tools use AI image generation, often built on a framework known as GANs, or Generative Adversarial Networks. As the name suggests, it is a two-part system: one part (the generator) fabricates an image while the other (the discriminator) judges whether it looks real. Both learn from training data, which in this case means thousands or millions of real photos.

So when a picture of a clothed person is uploaded, the tool attempts to “guess” what the person looks like under the clothing. It does not actually use the person’s body; rather, it fakes a nude version by blending patterns it has learned from its training data.

Imagine an AI’s version of trying to guess what is behind a curtain: the result is unsettling and can be intrusive.

Why People Are Concerned… and Justifiably So

The internet never ceases to baffle even its most experienced users. Tools such as the Free Undress AI Remover Tool, however, raise concerns that go well beyond the surface.

To begin with, these AI tools are a major breach of privacy. Consider someone in a bikini relaxing on the beach, happy to share their smile with the world. Now picture someone taking that image and quietly running it through an AI remover tool. Think of the consequences for that person, given that the result can be shared anywhere, from online groups to forums. The mental and emotional harm this kind of tool causes is incompatible with a healthy society.

Beyond these concerns lies a larger problem: the vast majority of people have no idea that such image manipulation is happening on a global scale. Once these fake images are posted online, erasing every trace of them becomes practically impossible. They can be stored on devices and misused for blackmail and other forms of extortion.

Example: What Happened on Telegram

As many are aware, a Telegram bot created a few years back did exactly this. Users could upload an image of a woman, and the bot would reply with an automatically generated nude image of her. Reports indicated that more than 100,000 images were created, and an alarming number of these fakes were made from pictures of minors. It was devastating from a human rights perspective, and it was against the law. By the time authorities tried to reach those responsible, the bot had already done irreparable damage.

Bots of the same type kept appearing. They were shut down, yet more and more kept popping up in their place.

Infographic: Tools That Areand Manipulative Step-By-Step Processes

These tools may be impressive from an engineering perspective, but the social and psychological damage they do is painful.

Consider a woman who discovers that a picture of her has been faked with these tools. An ordinary image from her life has been turned into a nude one. She never took such a picture, yet others will falsely attribute it to her. The shame, acute embarrassment, and pent-up rage take a drastic toll on the victim.

As a result, she may no longer want to log on to social media and may begin to withdraw from social life. Worst of all, this stems from the fear of running into mutual friends who may have seen the image. Even in the best-case scenario, the result is anxiety or depression that disrupts everyday life.

Tragically, it is often children and adolescents who bear the brunt of this. For them, the virtual world is a store of memories, be it school profiles, Snapchat, or group chats. The posts themselves are fleeting, but the repercussions are permanent.

The Gender Problem: Who’s Really Being Targeted?

No need to beat around the bush. These tools do not target everyone equally. The overwhelming majority of images run through the Free Undress AI Remover Tool are of women. Those women range from celebrities and influencers to everyday women going about mundane tasks like going to class or to work.

A clear pattern of exploitation emerges, and it does not show the people using these tools in a good light.

Take a look at this statistic from Sensity AI:

That single figure says a great deal about the problem.

Can the Law Do Anything About This?

This raises the question: where does the law come in?

In some jurisdictions, it already does. In others, the matter sits in a legal gray area. Some nations are only now coming to terms with deepfakes and fake nude images, while others are at the forefront. The UK, for example, is attempting to pass laws that would criminalize the creation of explicit images without consent. Some US states, such as Virginia and California, are ahead and already have such laws in place.

Even with these laws, a challenge remains. The internet is global and does not respect borders.

When someone operates from another region or behind anonymizing applications, tracking down the person creating or spreading the false images can be nearly impossible. Even when images are deleted from the original platform, copies can survive in private chats, backup servers, or dark web forums, and the circulation continues.

Can We Fight Back? Some Hopeful Solutions

Despite these threats, there are proactive measures that can be taken:

1. Awareness

The first step toward curbing the problem is creating awareness. The more we discuss tools like these, the more people will be alert to them. Guardians and educators need to know how these tools work and how to protect against them.

2. Better Technology

AI-powered tools for detecting generated nude images are being developed. Other developers are working on watermarks: tags hidden within AI-generated images that would allow platforms to tell whether an image is real or synthetic.

3. Automated Content Moderation

Social media platforms should strengthen their content moderation. Instagram, Reddit, and X already have moderation systems, but they struggle to respond to reports in an efficient and timely manner. Victims of this kind of impersonation often wait days or weeks for the content to be taken down.

4. Support Networks

Support networks are organizations that offer legal assistance, psychological help, and any other service a victim may need. The Cyber Civil Rights Initiative is a good example of an organization doing important work in this area.

Staying Safe

While it’s impossible to eliminate risk entirely, these practices can help reduce your exposure:

- Check what is publicly visible on your profile, and make sure you are not tagged in any high-resolution images.

- Avoid close-ups of your face or any other identifiable feature. If possible, compose shots so that your head is less prominent.

- Add subtle markings to your images, which can make them harder to edit with AI.

- Periodically run your images through a reverse image search to monitor whether others are misusing them.

- If you find abuse, act right away: capture screenshots, report it, and ask for help.

Final Thoughts

Whether tools built for violation, privacy breaches, and humiliation should exist is for the public to decide. The American public has to ask itself this question: is there any need for free AI undress tools?

AI’s positive impact on society is apparent in medicine, art, education, and other fields. Its misuse to violate privacy and dignity, however, is a challenge that society should nip in the bud without question.

If this matter concerns you, here is your course of action:

- Discuss it with your family and friends, and raise it on social media platforms.

- Help inform others, particularly young women and adolescents, about the dangers.

- Advocate for policy changes that demand greater accountability, stronger tech safeguards, and more stringent rules for platforms.

FAQs

1. What is the Free Undress AI Remover Tool?

The Free Undress AI Remover Tool is an example of a web application that creates fake nude photographs of people by algorithmically replacing their clothing. It uses AI to make a probabilistic guess at what lies beneath a person’s attire, based on the many photographs it was trained on.

2. Is the Free Undress AI Remover Tool real?

Indeed, it is real, unfortunately. There is a myriad of online bots and tools that provide this functionality disguised as mindless fun or entertainment, but their output can be truly detrimental and invasive.

3. What is the technical workflow of the tool?

The tool relies on AI and deep learning, often Generative Adversarial Networks (GANs). These models examine the original photograph, identify outlines, skin tones, and textures, and then create a new synthetic image in which the person appears unclothed. The output is not a real photograph but an AI-generated simulation.

4. Can AI remove clothing from images?

Not literally. The AI does not “remove” clothes from an image. It generates a new, fictitious image that depicts an unclothed individual. What it shows is not the person’s actual body but a fabrication built from visual prediction and pattern recognition.

5. Are such images generated from this tool authentic or fabricated?

The images are fabricated. The AI produces them by guessing, not by revealing anything real. The way they are generated makes them look authentic, however, and that is exactly what makes them dangerous and harmful.

6. Is the use of such tools permissible?

In some places, creating or distributing such images without the subject’s explicit consent is illegal. In other places, such acts remain legal. Some jurisdictions have explicit laws against distributing deepfake pornography or AI-created nudes, while others are still catching up with the technology.

7. What are the risks of using or sharing fake AI nudes?

- Legal repercussions, including possible criminal offenses

- Emotional trauma to victims

- Harm to reputation

- Social media bans or account suspensions

- Damage to personal trust and relationships

Above all, sharing these images without consent is a violation of privacy and dignity.

8. Can victims report fake nude images created by AI?

Yes. Victims may:

- Report the content to the service hosting it (Instagram, Reddit, Telegram, etc.)

- Capture relevant screenshots

- Contact local police

- Reach out to image-based abuse advocacy organizations

9. How can I check if my photos are used in some of these tools?

Tracking AI fakes is nearly impossible, but you can run a reverse image search with Google Images or TinEye to see whether your images are showing up in places you do not expect. Also look out for strange interactions or messages directed at you on social media.
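To give a rough sense of how a computer can tell that two pictures are “the same” even after small changes, here is a toy sketch of one well-known idea, perceptual hashing (an “average hash”). It is an illustration only: it is an assumption, not a description of how Google Images or TinEye actually work, and the function names (`shrink`, `average_hash`, `hamming`) and the list-of-rows grayscale input format are hypothetical choices made for this example.

```python
from typing import List

# Assumed input format: a grayscale image as rows of 0-255 integers.
Gray = List[List[int]]


def shrink(img: Gray, size: int = 8) -> Gray:
    """Downsample to a size x size grid by averaging rectangular blocks."""
    h, w = len(img), len(img[0])
    small = []
    for r in range(size):
        r0 = r * h // size
        r1 = max((r + 1) * h // size, r0 + 1)  # each block covers at least one row
        row = []
        for c in range(size):
            c0 = c * w // size
            c1 = max((c + 1) * w // size, c0 + 1)  # and at least one column
            block = [img[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            row.append(sum(block) // len(block))
        small.append(row)
    return small


def average_hash(img: Gray, size: int = 8) -> int:
    """One bit per cell of the shrunken image: 1 if brighter than the mean."""
    pixels = [p for row in shrink(img, size) for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; a small distance suggests a near-duplicate."""
    return bin(a ^ b).count("1")


# Synthetic demo images (not real photos): a left-to-right gradient,
# a slightly brightened copy of it, and an unrelated top-to-bottom gradient.
original = [[x * 16 for x in range(16)] for _ in range(16)]
brighter = [[v + 10 for v in row] for row in original]
unrelated = [[y * 16 for _ in range(16)] for y in range(16)]

h_orig = average_hash(original)
d_same = hamming(h_orig, average_hash(brighter))
d_diff = hamming(h_orig, average_hash(unrelated))
print("distance to brightened copy:", d_same)
print("distance to unrelated image:", d_diff)
```

In this sketch the brightened copy hashes very close to the original while the unrelated image lands far away. That is the general intuition behind matching re-uploaded or lightly edited copies of a photo. A heavily altered fake may not match at all, which is one reason tracking these images is so difficult.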

10. What should I do if I am a victim of AI-generated nudes?

- Document the evidence (screenshots, links)

- Report the images to the platforms where they are being shared

- Contact a cybercrime unit or local law enforcement

- Seek legal assistance or psychological support if necessary

- Rally support from trusted friends or family