June 15, 2025 | TechThrilled Newsroom

In a decisive move that underscores the growing urgency to regulate artificial intelligence technologies, the New York State Legislature has passed a landmark bill aimed at preventing AI-fueled disasters. The legislation, formally titled the AI Risk Prevention and Accountability Act (AIRPA), represents one of the most comprehensive efforts by a U.S. state to mitigate the unintended consequences of advanced AI systems across public and private sectors.

Signed into law by Governor Kathy Hochul after a swift but thorough legislative process, the bill lays out a multi-faceted framework to identify, monitor, and regulate the deployment of artificial intelligence, particularly in areas deemed “high risk,” such as transportation, critical infrastructure, law enforcement, finance, healthcare, and public communication platforms.

Background: The Rising Risk of AI-Driven Catastrophes

AIRPA follows a series of recent incidents and near-misses in which autonomous systems, deepfakes, AI-driven misinformation campaigns, and flawed predictive analytics threatened public safety, economic stability, and civil liberties.

Among the most notable cases influencing the legislation:

- A 2024 drone malfunction in upstate New York that caused property damage after a self-learning flight system misinterpreted its instructions.

- A deepfake emergency broadcast hoax that triggered mass panic in parts of Manhattan.

- AI-driven policing tools, trained on biased datasets, misidentifying individuals and leading to wrongful detentions.

- Hospital networks experimenting with AI triage systems that reportedly deprioritized minority patients due to historical data bias.

The bill’s sponsors argue that while AI holds immense potential, the current pace of development and deployment—largely driven by private tech companies—poses substantial risk without proper oversight, testing, and accountability mechanisms.

Overview of the AI Risk Prevention and Accountability Act

AIRPA establishes a multi-tiered oversight and response structure that empowers the state to act preemptively against AI systems that could harm the public or infrastructure. Key provisions include:

1. AI Risk Classification System

All AI systems operating within the state must be categorized as:

- Low-risk (e.g., productivity tools)

- Moderate-risk (e.g., health monitoring apps)

- High-risk (e.g., autonomous vehicles, public surveillance AI, law enforcement tools)

Any system deemed high-risk must undergo mandatory safety testing, ethical review, and licensing before deployment.
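For readers who think in code, the tiering above can be pictured roughly as follows. This is an illustrative sketch only, not anything defined by the act: the names `RiskTier`, `EXAMPLE_TIERS`, `HIGH_RISK_REQUIREMENTS`, and `missing_requirements` are hypothetical, and the category labels simply mirror the examples listed above. Because the article states pre-deployment requirements only for high-risk systems, the sketch assumes the lower tiers have none.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


# Example categories taken from the article; the keys are hypothetical labels.
EXAMPLE_TIERS = {
    "productivity_tool": RiskTier.LOW,
    "health_monitoring_app": RiskTier.MODERATE,
    "autonomous_vehicle": RiskTier.HIGH,
    "public_surveillance_ai": RiskTier.HIGH,
    "law_enforcement_tool": RiskTier.HIGH,
}

# Steps the article says a high-risk system must complete before deployment.
HIGH_RISK_REQUIREMENTS = ("safety_testing", "ethical_review", "licensing")


def missing_requirements(category: str, completed: set[str]) -> list[str]:
    """Return the pre-deployment steps still outstanding for a system.

    Assumption: only high-risk systems have mandatory steps, since the
    article does not describe requirements for the other tiers.
    """
    tier = EXAMPLE_TIERS[category]
    if tier is not RiskTier.HIGH:
        return []
    return [step for step in HIGH_RISK_REQUIREMENTS if step not in completed]
```

Under these assumptions, `missing_requirements("autonomous_vehicle", {"safety_testing"})` would list ethical review and licensing as still outstanding, while a productivity tool would have nothing to complete.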

2. State AI Oversight Board (SAIOB)

The board, a newly created agency under the NYS Department of Technology, will:

- Audit high-risk AI applications

- Maintain a registry of AI systems in use across state agencies and municipalities

- Oversee ethics compliance and environmental impact assessments

- Collaborate with academic institutions for independent technical evaluations

3. Mandatory Incident Reporting

All public and private entities must report AI malfunctions, failures, or dangerous outputs within 72 hours. Noncompliance can result in fines, suspension of operations, or public blacklisting.
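The 72-hour window is simple date arithmetic, which a compliance team might track along these lines. This is a minimal sketch under stated assumptions: the article does not say whether the clock starts when the incident occurs or when it is discovered, nor whether a report filed at exactly 72 hours counts. The code assumes the clock starts at the incident time and treats the deadline as inclusive. The function names and the example timestamp are hypothetical.

```python
from datetime import datetime, timedelta, timezone

REPORTING_WINDOW = timedelta(hours=72)  # window stated in the article


def report_deadline(incident_time: datetime) -> datetime:
    """Latest time a report can be filed (assumes the clock starts at the incident)."""
    return incident_time + REPORTING_WINDOW


def is_report_on_time(incident_time: datetime, reported_time: datetime) -> bool:
    """True if the report was filed within the window (deadline assumed inclusive)."""
    return reported_time <= report_deadline(incident_time)


# Hypothetical incident at 09:00 UTC on July 1, 2025.
incident = datetime(2025, 7, 1, 9, 0, tzinfo=timezone.utc)
print(report_deadline(incident).isoformat())
```

Using timezone-aware timestamps avoids ambiguity when an incident and its report are logged in different time zones.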

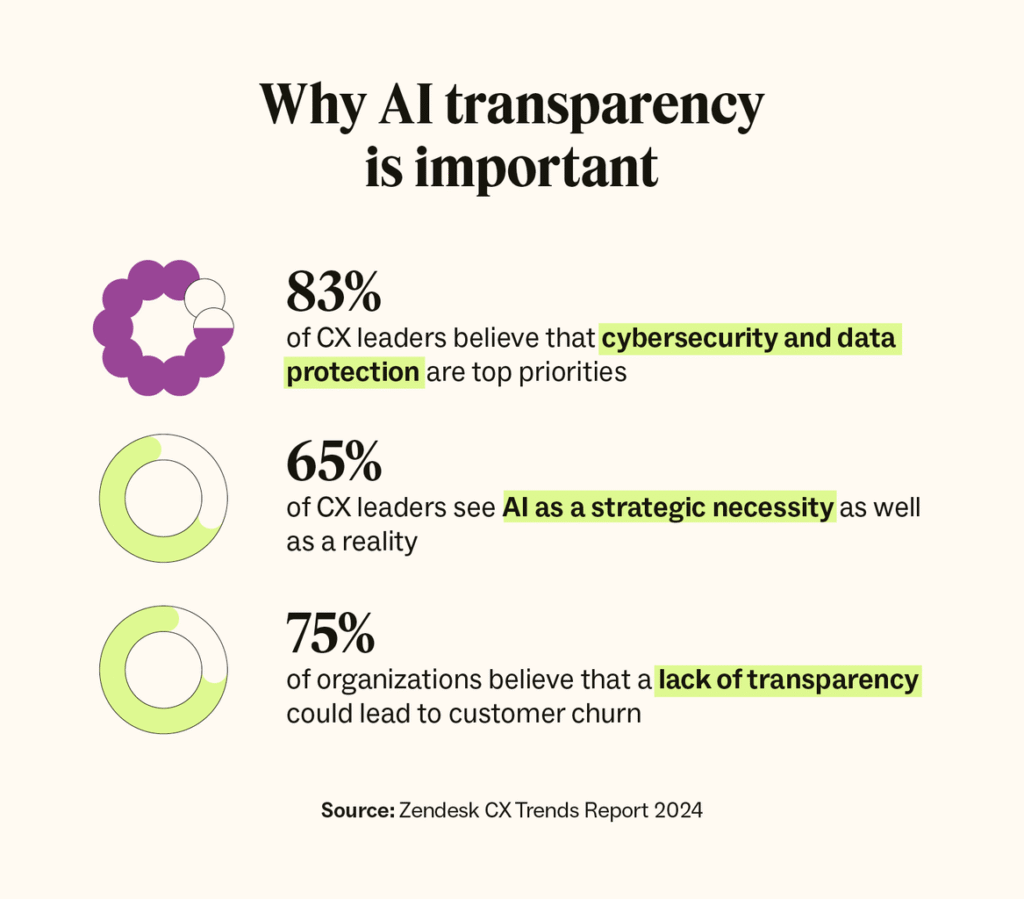

4. AI Ethics and Transparency Standards

Developers and deployers of AI must:

- Disclose training data sources and model limitations

- Implement human-in-the-loop fail-safes for critical decisions

- Provide accessible explanations for AI decisions affecting individuals

5. Emergency Intervention Authority

In the event of an AI-related emergency, the Governor may invoke a statewide AI Pause Order, halting specific systems until safety evaluations are completed.

Legislative Support and Motivations

The bill passed with bipartisan support. Lead sponsor State Senator Rachel Menendez (D-Brooklyn) emphasized the need for “regulation that doesn’t stifle innovation but safeguards the public from digital catastrophe.”

“We’ve seen what unregulated AI can do. From deepfake elections to biased law enforcement tools, the harm is real. New York has an obligation to lead with laws that match the scale and speed of technological change.”

Republican lawmakers who supported the bill focused on national security and economic resilience, highlighting the risk of foreign entities manipulating AI systems or infrastructure reliant on third-party code.

Industry Reaction: Mixed But Cooperative

Tech companies and AI developers based in New York have issued cautiously supportive statements, acknowledging the need for regulation but raising concerns about overreach.

Syntrix AI, a leading AI solutions firm in Manhattan, stated:

“While we welcome guardrails that enhance safety and trust, we hope implementation is pragmatic and involves regular consultation with technical stakeholders.”

AI Now Institute director Dr. Meredith Wong praised the bill’s inclusion of algorithmic transparency requirements and its ethical review board, calling it “the strongest U.S. state-level AI governance measure to date.”

However, smaller startups expressed concern over the compliance costs and legal uncertainty around what constitutes a “high-risk” system, particularly for new AI products under development.

To address these concerns, the bill includes a grace period of 180 days before full enforcement begins, and a startup advisory desk will be established within SAIOB to help early-stage ventures navigate the law.

Public Impact: How the Law Will Affect New Yorkers

For the general public, AIRPA promises several immediate and long-term benefits:

- Improved safety standards for AI in public transit, healthcare, and emergency response.

- Greater accountability for law enforcement tools that may affect due process.

- Right to explanation: Individuals affected by AI-driven decisions in employment, finance, or government services will receive clear explanations and options for recourse.

- Protection against deepfakes: Media outlets must label AI-generated content in news or political campaigns, or face legal penalties.

Civic groups and digital rights organizations like the New York Civil Liberties Union (NYCLU) supported the move, emphasizing that transparency and fairness in algorithmic decision-making are “essential to maintaining public trust in emerging technologies.”

Potential to Influence National and Global Policy

New York’s AI bill could serve as a model for other states, and even influence federal legislation. Congressional leaders have already expressed interest in New York’s approach as a potential template for national AI safety frameworks.

Globally, the bill invites comparison with the European Union’s AI Act, though New York’s version is more targeted toward operational safety and disaster prevention. By combining technical standards with ethical oversight, AIRPA positions New York as a global regulatory innovator in the AI space.

International policy analysts suggest that other tech-forward states—like California, Massachusetts, and Washington—may soon introduce similar bills to address growing public concern over AI’s unchecked growth.

What Comes Next: Implementation and Oversight

Now that the bill has been signed into law, the State AI Oversight Board will be formed within 90 days. Its early tasks will include:

- Creating a risk-rating system for existing AI deployments

- Releasing implementation guidelines for public and private sectors

- Hosting public town halls to inform New Yorkers about their new AI rights and protections

Additionally, AI literacy programs will be rolled out across high schools, public libraries, and municipal training centers to ensure that citizens, especially marginalized groups, are informed and equipped to navigate AI-augmented environments.

Conclusion: A Defining Moment for AI Governance

The passage of the AI Risk Prevention and Accountability Act marks a turning point in how states approach emerging technologies. Rather than waiting for crises to unfold, New York is opting for a proactive, principle-based approach to AI governance—placing ethics, transparency, and human safety at the core of innovation.

This move may not solve every challenge AI presents, but it builds a foundational layer of trust and accountability essential for the responsible integration of AI into daily life.

By balancing innovation with precaution, New York is sending a powerful message: technology must work for the people, not just for profit or power. As AI continues to transform societies, laws like AIRPA may become the bedrock of a safer, more equitable digital future.