Imagine describing a dream you had (a city floating in the clouds, a desert where plants sing, or a retro game level made of jelly) and seeing it instantly become a playable video game. No coding, no design software, just words. That’s the kind of future Google DeepMind is building with Genie 3, the latest evolution of its AI tool for video game generation. While most people know DeepMind for AlphaGo’s victories in Go and for AlphaFold’s protein structure predictions, Genie 3 takes a wildly different path. It’s not just about solving problems; it’s about creating worlds.

In a way, Genie 3 feels more like magic than machine learning. But under the surface, it’s driven by one of the most advanced visual generative models ever built—a system that can take a single image or short prompt and generate entire playable 2D environments that mimic classic video game physics. While the concept sounds futuristic, the tech behind Genie 3 is rooted in years of steady progress in generative AI, computer vision, and reinforcement learning.

Let’s dive deep into what Genie 3 is, how it works, what makes it special, and what it might mean for the future of games, creativity, and AI.

What Is Genie 3?

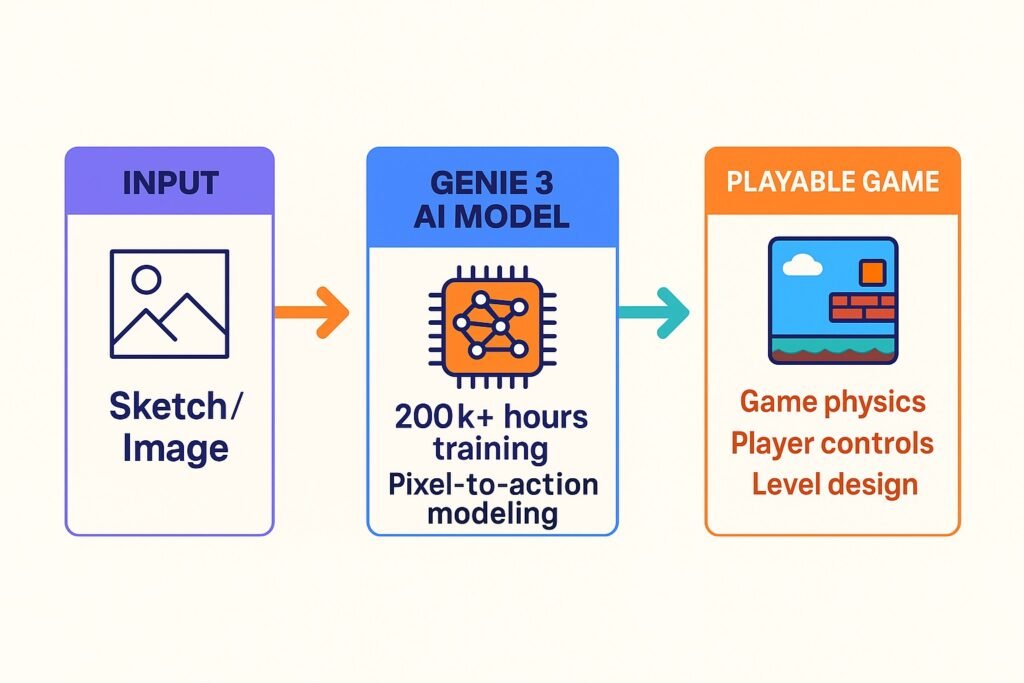

Genie 3 is an artificial intelligence model developed by Google DeepMind that transforms visual prompts—like images or sketches—into interactive, playable 2D video games. Unlike traditional game development tools that require game engines, physics coding, and visual asset creation, Genie 3 automates the entire process.

The core magic of Genie 3 lies in its ability to learn game dynamics from watching large amounts of online video, especially old-school platformers and 2D arcade games. The model doesn’t just see pixels; it picks up regularities in game logic, player behavior, level design, and object physics. Based on those learned patterns, it can simulate similar games without any pre-built engine.

But why is this important? Because Genie 3 represents a radical shift in the relationship between creators and technology. Anyone—from a game designer to a curious teenager—can describe or draw a scene, and Genie 3 can bring it to life as an actual game. It’s democratizing creativity by removing the technical barriers.

How Does Genie 3 Work?

At its heart, Genie 3 is a foundation model trained on more than 200,000 hours of gameplay videos. These videos were sourced from the internet, spanning various 2D games—from classics like Super Mario and Mega Man to indie pixel art adventures. Genie 3 watches these games not like a human playing for fun but like a scientist analyzing every detail.

Training is self-supervised: the videos carry no labels for rules or controls, so Genie 3 has to work out how games behave by predicting what happens next. For instance, it learns that gravity pulls the character down, that spikes cause death, and that a door might lead to the next level. These aren’t programmed; they’re learned from watching patterns.
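To make "learned, not programmed" concrete, here is a minimal sketch of recovering gravity purely from observed positions. It is a toy illustration, not Genie 3's training code: the function name `estimate_gravity`, the synthetic jump data, and the second-difference estimator are all assumptions chosen for clarity.

```python
def estimate_gravity(heights, dt):
    """Estimate a constant vertical acceleration from evenly spaced samples.

    Uses second differences: a ~ (y[t+1] - 2*y[t] + y[t-1]) / dt**2,
    averaged over the whole trajectory.
    """
    if len(heights) < 3:
        raise ValueError("need at least three samples")
    accels = [
        (heights[i + 1] - 2 * heights[i] + heights[i - 1]) / dt ** 2
        for i in range(1, len(heights) - 1)
    ]
    return sum(accels) / len(accels)


# Synthetic "observed" jump (hypothetical data). The estimator only sees
# the heights; it is never told the value of true_g.
dt = 0.1
true_g = 9.8
v0 = 5.0
observed = [v0 * (i * dt) - 0.5 * true_g * (i * dt) ** 2 for i in range(10)]

estimated = estimate_gravity(observed, dt)
print(round(estimated, 3))  # negative, because heights are measured upward
```

A real model works on raw pixels and far messier data, but the principle is the same: the rule is inferred from how things move, not written in by hand.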

Once trained, Genie 3 takes a static input image—like a hand-drawn sketch, a digital painting, or even a photo of a room—and renders it into an interactive environment. Then it adds player controls, physics logic, and object behaviors, enabling users to actually move through and interact with the world.
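The loop described above (start from one image, then produce each new frame from the previous frame plus the player's input) can be sketched as follows. This is a hedged toy: `predict_next` is a hand-written stand-in for the learned model, and the ASCII frame format, `MOVES` table, and helper names are hypothetical.

```python
# A tiny ASCII "prompt image": '@' is the player, '#' is solid ground.
PROMPT = [
    "......",
    "......",
    "@.....",
    "####..",
]

MOVES = {"left": -1, "right": 1, "noop": 0}


def find_player(frame):
    """Return the (x, y) position of the player marker."""
    for y, row in enumerate(frame):
        x = row.find("@")
        if x != -1:
            return x, y
    raise ValueError("no player in frame")


def is_solid(frame, x, y):
    """Ground tiles and everything outside the frame block movement."""
    if y < 0 or y >= len(frame) or x < 0 or x >= len(frame[0]):
        return True
    return frame[y][x] == "#"


def predict_next(frame, action):
    """Stand-in for the learned model: next frame from frame + action."""
    x, y = find_player(frame)
    nx = x + MOVES[action]
    if is_solid(frame, nx, y):
        nx = x  # blocked sideways
    ny = y + 1 if not is_solid(frame, nx, y + 1) else y  # fall one cell
    rows = [list(row.replace("@", ".")) for row in frame]
    rows[ny][nx] = "@"
    return ["".join(r) for r in rows]


def rollout(prompt, actions):
    """Generate frames one at a time, feeding each output back in."""
    frames = [prompt]
    for action in actions:
        frames.append(predict_next(frames[-1], action))
    return frames


frames = rollout(PROMPT, ["right"] * 4)
print("\n".join(frames[-1]))
```

In the real system the hand-coded rules inside `predict_next` would be replaced by a neural network's prediction; only the outer loop is meant to carry over.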

Here’s what’s unique:

- No pre-defined engine: Genie 3 doesn’t use Unity or Unreal. It simulates physics from what it learned in training, using the image as the starting point.

- One-shot generation: The AI can build an entire playable level from a single frame.

- Physics-aware: It learns to replicate game physics like jumping, collision, and movement.

- Pixel-to-action understanding: Genie 3 connects what it sees (pixels) with what a player can do (actions).

This combination of visual perception + interactivity + self-learning makes Genie 3 one of the most powerful generative AI systems for games ever made.
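One way to picture the pixel-to-action link is to label each transition between frames with whichever entry in a small action codebook best explains the change. The sketch below is an illustration only: the original Genie research describes learning a discrete set of latent actions from unlabeled video, whereas here the `CODEBOOK` is fixed by hand and the frames are reduced to player positions, both of which are simplifying assumptions.

```python
# Hand-written codebook of displacement vectors (an assumption; a real
# system would learn these). Entries are anonymous ids, not named buttons.
CODEBOOK = [(-1, 0), (1, 0), (0, -1), (0, 0)]


def infer_latent_action(prev_pos, next_pos):
    """Return the index of the codebook entry closest to the observed move."""
    dx = next_pos[0] - prev_pos[0]
    dy = next_pos[1] - prev_pos[1]

    def squared_distance(entry):
        return (entry[0] - dx) ** 2 + (entry[1] - dy) ** 2

    return min(range(len(CODEBOOK)), key=lambda i: squared_distance(CODEBOOK[i]))


# Player positions extracted from six consecutive frames (hypothetical data,
# with y decreasing as the player moves up the screen).
track = [(0, 5), (1, 5), (2, 5), (2, 4), (2, 4), (1, 4)]

actions = [infer_latent_action(a, b) for a, b in zip(track, track[1:])]
print(actions)
```

Once every transition carries an action id like this, the same ids can be handed to a player as controls, which is how watching can turn into playing.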

Why It’s a Big Deal

The implications of Genie 3 go far beyond just making games. Think about the broader idea: an AI that can understand how a world works just by watching videos, and then recreate it in real time.

For education, Genie 3 could be used to build interactive learning environments. Instead of watching videos about ecosystems or history, students could explore those subjects through AI-generated games.

In art and storytelling, writers and visual artists could use it to quickly prototype worlds from their narratives. A comic panel could become a game level. A children’s story illustration could turn into a sandbox adventure.

Game development itself could become more accessible. Small studios, solo developers, or even fans could create remixed versions of their favorite games by feeding in just a drawing or an idea. Genie 3 doesn’t replace game design; it amplifies it.

Let’s not forget the accessibility angle. For those with limited technical knowledge or physical disabilities, building games through text and images could open up entirely new creative careers and experiences.

A Glimpse at Genie 3 in Action

To make this real, imagine a child drawing a robot on a piece of paper. They upload it into Genie 3. The model interprets it as a game character. The AI adds a background, maybe a cyber-city, some floating platforms, obstacles, and even a goal—say, collect batteries and power the robot’s city. Within seconds, the drawing becomes a game.

Or consider a digital artist who paints a dreamlike forest. Genie 3 turns it into a side-scrolling platformer where players swing from glowing vines and solve puzzles. No code, no hand-built mechanics. Just imagination and interaction.

What Makes Genie 3 Different from Other AIs?

While many AI tools can generate content—like images with DALL·E or text with ChatGPT—Genie 3 goes further by combining generation with simulation. This makes it multimodal, blending vision, movement, interaction, and physics into a single experience.

Some other differences:

- Generative agents vs. passive outputs: Most generative models create content for viewing (text, art). Genie 3 creates content for playing.

- Physics simulation from pixels: Genie 3 isn’t told how gravity works. It infers physics from visual cues and behavior patterns.

- Dynamic interactivity: It builds logic into environments, meaning they respond to player inputs—something traditional visual AIs can’t do.

Challenges and Limitations

Of course, Genie 3 isn’t perfect. There are still many limitations that DeepMind and researchers acknowledge:

- 2D only (for now): Genie 3 works best in 2D pixel-style environments. Full 3D interactivity remains a technical leap.

- No complex storytelling: It can build environments, but it doesn’t write narratives or quests yet.

- Physics can be unpredictable: Since the AI learns from examples, some generated worlds have quirky or broken mechanics.

- Not production-ready: Genie 3 is still in research mode. Public tools or APIs haven’t been released yet.

That said, the progress so far suggests that these hurdles are surmountable—and future versions may incorporate more features like 3D scenes, character dialogue, or multi-level game architecture.

Comparison Chart: Genie 3 vs Traditional Game Development

| Feature | Traditional Game Dev | Genie 3 |
|---|---|---|
| Requires coding | ✅ Yes | ❌ No |
| Needs a game engine | ✅ Unity, Unreal | ❌ None |
| Physics implementation | ✅ Manual | ✅ Learned |
| Generates from sketch | ❌ No | ✅ Yes |
| Interactivity | ✅ Yes | ✅ Yes |
| Multiplayer | ✅ Possible | ❌ Not yet |
| Time to build | ⏳ Weeks/Months | ⚡ Seconds |

The Future of Genie 3

Looking ahead, Genie 3 might serve as a foundation for a completely new class of creative tools. Think of it as an early Photoshop for interactive worlds. Directions it could grow in include:

- Integration with VR/AR, allowing users to walk through imagined environments.

- Text-to-game generation, where a sentence like “A wizard flying over lava caves” becomes a playable level.

- AI co-creation, where users and AI work together to refine and evolve levels through feedback.

- Educational game design, where kids learn logic, physics, and storytelling while building games visually.

Genie 3 might also merge with other DeepMind technologies like AlphaCode (for AI coding) or Gemini (for reasoning), creating a unified platform for both generating and evolving intelligent, playable systems.

Conclusion: Why Genie 3 Matters

At first glance, Genie 3 might seem like a fun experiment—a cool way to turn pictures into pixel games. But it’s much more than that. It’s a glimpse into the future of how we interact with technology. With Genie 3, creation becomes intuitive, imagination becomes executable, and anyone can be a game designer.

It’s easy to underestimate what this means. For decades, making games has been a highly technical task. But Genie 3 breaks that barrier, much like how digital cameras made everyone a photographer or how blogging made everyone a writer.

We’re entering an era where “playable creativity” becomes a new form of expression. And Genie 3 is leading the charge—not just for AI researchers, but for artists, storytellers, educators, and dreamers around the world.

🧠 FAQ Section

Q: Is Genie 3 available to the public?

No, as of now, Genie 3 is a research project by Google DeepMind. A public version hasn’t been released yet.

Q: Can Genie 3 generate 3D games?

Currently, it only supports 2D game environments. However, future updates may introduce 3D capabilities.

Q: Do you need to know coding to use Genie 3?

No. The goal is to make game creation as simple as providing an image or prompt—no coding required.
